About Elements

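TANAKA Precious Metals is a leading company in the precious metals field.

Elements is an online media outlet that, under the slogan “pursuing the ultimate in precious metals,” shares insights to help bring about a better society and a prosperous future for the planet. It covers the advanced materials and solutions that support society’s development, the development stories behind them, the voices of the engineers, and the company’s management philosophy and vision.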

Google is testing a new robot that can program itself

Top Image: Charlotte Hu

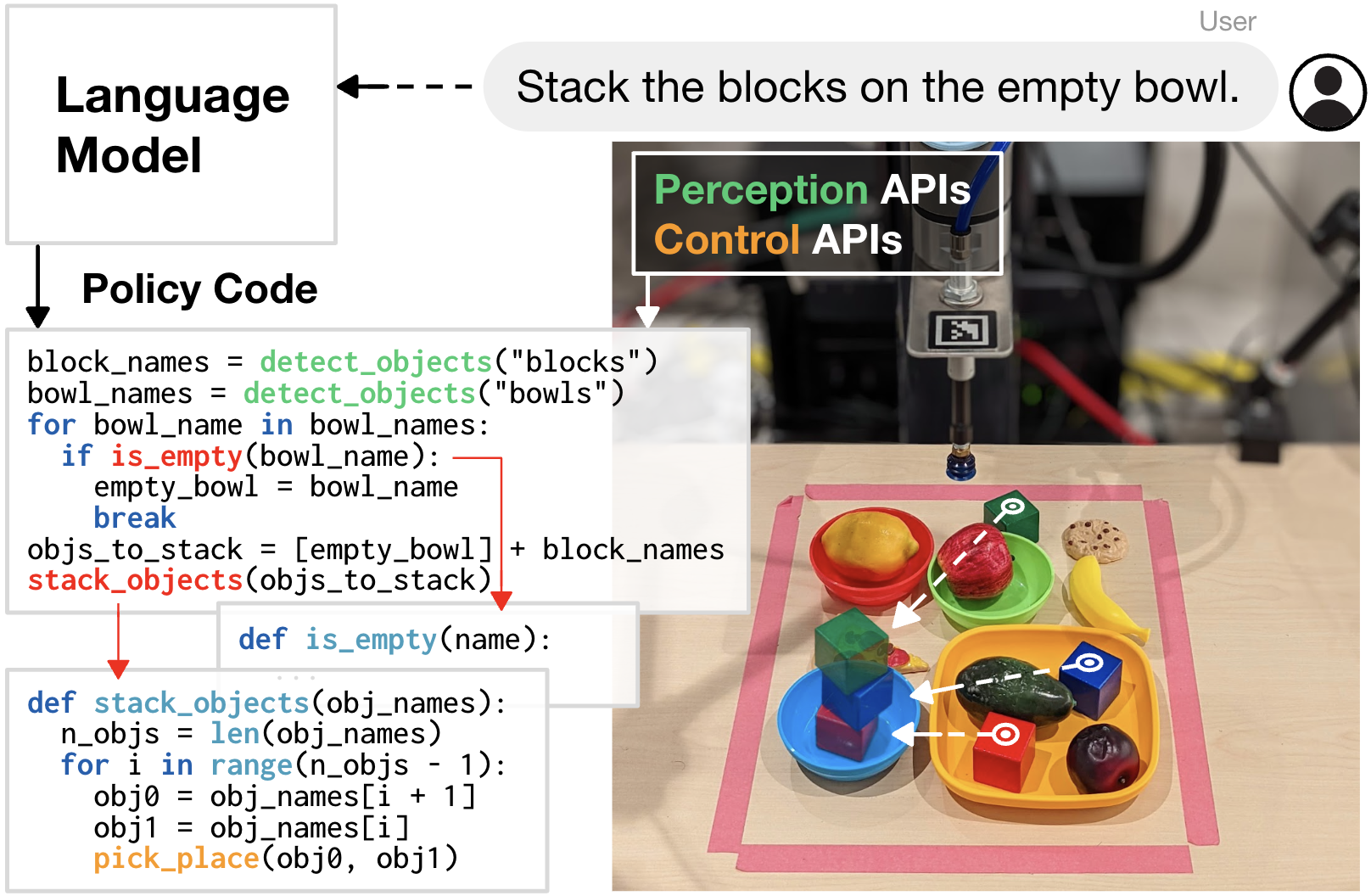

Writing working code can be a challenge. Even relatively easy languages like HTML require the coder to understand the specific syntax and available tools. Writing code to control robots is even more involved and often has multiple steps: There’s code to detect objects, code to trigger the actuators that move the robot’s limbs, code to specify when the task is complete, and so on. Something as simple as programming a robot to pick up a yellow block instead of a red one is impossible if you don’t know the coding language the robot runs on.

But Google’s robotics researchers are exploring a way to fix that. They’ve developed a robot that can write its own programming code based on natural language instructions. Instead of having to dive into a robot’s configuration files to change block_target_color from #FF0000 to #FFFF00, you could just type “pick up the yellow block” and the robot would do the rest.
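To make the contrast concrete, here is a minimal sketch of the two routes: a hand-edited setting versus a plain-language request. Only `block_target_color` and the two hex codes come from the article; the `config` dictionary, the `COLOR_HEX` lookup, and `apply_instruction` are hypothetical. The simple keyword matching is a stand-in for illustration and is not how Google’s system works.

```python
# Hypothetical configuration; only block_target_color comes from the article.
config = {"block_target_color": "#FF0000"}  # red

# Invented lookup from colour words to hex codes.
COLOR_HEX = {"red": "#FF0000", "yellow": "#FFFF00"}


def apply_instruction(instruction, config):
    """Return a copy of config retargeted to the first known colour word."""
    updated = dict(config)
    for word in instruction.lower().split():
        if word in COLOR_HEX:
            updated["block_target_color"] = COLOR_HEX[word]
            break
    return updated


new_config = apply_instruction("pick up the yellow block", config)
print(new_config["block_target_color"])  # → #FFFF00
```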

Code as Policies (or CaP for short) is a coding-specific language model developed from Google’s Pathways Language Model (PaLM) to interpret natural language instructions and turn them into code it can run. Google’s researchers trained the model by giving it examples of instructions, formatted as code comments (the notes developers write to explain what their code does to anyone reviewing it), paired with the corresponding code. From that, it was able to take new instructions and “autonomously generate new code that re-composes API calls, synthesizes new functions, and expresses feedback loops to assemble new behaviors at runtime,” Google engineers explained in a blog post published this week. In other words, given a comment-like prompt, it could come up with some probable robot code. Read the preprint of their work here.
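As a rough illustration of what a comment-like prompt might look like, the sketch below pairs each instruction (written as a comment) with the code that carries it out, then ends with a new instruction for the model to complete. The robot calls such as `robot.pick` and `find_block` are invented placeholders, and `build_prompt` is a hypothetical helper, not part of Google’s published code.

```python
# Hypothetical paired examples: an instruction, then the code that performs it.
# The robot API names inside the strings are invented for illustration.
EXAMPLES = [
    ("pick up the red block", "robot.pick(find_block(color='red'))"),
    ("put the block in the bowl", "robot.place(find_object('bowl'))"),
]


def build_prompt(examples, new_instruction):
    """Format paired examples and a new instruction as one code-like prompt."""
    parts = []
    for instruction, code in examples:
        parts.append(f"# {instruction}\n{code}\n")
    # The prompt ends on a bare comment, so the most likely continuation
    # for a code-trained model is the code that matches it.
    parts.append(f"# {new_instruction}\n")
    return "\n".join(parts)


prompt = build_prompt(EXAMPLES, "pick up the yellow block")
print(prompt)
```

The language model would then be asked to continue the text after the final comment, and its continuation would be treated as candidate robot code.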

Google AI

To get CaP to write new code for specific tasks, the team provided it with “hints,” like what APIs or tools were available to it, and a few instructions-to-code paired examples. From that, it was able to write new code for new instructions. It does this using “hierarchical code generation” which prompts it to “recursively define new functions, accumulate their own libraries over time, and self-architect a dynamic codebase.” This means that given one set of instructions once, it can develop some code that it can then repurpose for similar instructions later on.
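The recursive idea can be sketched in a few lines. This is an assumption-laden toy, not Google’s implementation: `fake_model` is a lookup table of canned code standing in for the language model, and the function names in it are invented. The generator inspects each piece of returned code, asks for any helper functions that are not yet defined, and stores everything in a growing library that later requests can reuse.

```python
import ast
import builtins

# Stand-in for the language model: canned code for each requested function.
# A real system would query a model here; these snippets are invented.
CANNED_COMPLETIONS = {
    "stack_blocks": (
        "def stack_blocks(blocks):\n"
        "    return [describe(b) for b in sort_by_size(blocks)]\n"
    ),
    "sort_by_size": (
        "def sort_by_size(blocks):\n"
        "    return sorted(blocks, key=lambda b: b['size'], reverse=True)\n"
    ),
    "describe": (
        "def describe(block):\n"
        "    return block['color'] + ' block'\n"
    ),
}


def fake_model(function_name):
    """Return canned source code for the requested function."""
    return CANNED_COMPLETIONS[function_name]


def called_names(source):
    """Return the names of plain functions called anywhere in the source."""
    return {
        node.func.id
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
    }


def generate(function_name, library):
    """Define function_name in library, generating missing helpers first."""
    if function_name in library:
        return  # generated on an earlier request, so reuse it
    source = fake_model(function_name)
    for name in called_names(source):
        is_known = name in library or hasattr(builtins, name)
        if name != function_name and not is_known:
            generate(name, library)  # recurse into the undefined helper
    exec(source, library)  # add the new function to the shared library


library = {}
generate("stack_blocks", library)
blocks = [{"color": "red", "size": 1}, {"color": "yellow", "size": 3}]
print(library["stack_blocks"](blocks))  # → ['yellow block', 'red block']
```

A later request that needs `sort_by_size` or `describe` would find them already in `library`. Running generated code with `exec` is only reasonable here because the code is canned; the toy also does not handle helpers that call each other in a cycle.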

CaP can also use the arithmetic operations and logic of specific languages. For example, a model trained on Python can use the appropriate if/else and for/while loops when needed, and use third-party libraries for additional functionality. It can also turn ambiguous descriptions like “faster” and “to the left” into the precise numerical values necessary to perform the task. And because CaP is built on top of a regular language model, it has a few features unrelated to code—like understanding emojis and non-English languages.
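For a sense of what that could mean in practice, here is a hand-written sketch of the kind of code such descriptions might resolve to. Every number, unit, and function name below is an assumption chosen for illustration; the article does not say which values CaP actually picks.

```python
# Hypothetical robot state; units and values are invented for illustration.
speed = 100            # millimetres per second
position = (300, 200)  # (x, y) in millimetres, with +x pointing right


def faster(current_speed, factor=1.5, limit=500):
    """Read "faster" as scaling the speed by a fixed factor, up to a cap."""
    return min(current_speed * factor, limit)


def to_the_left(xy, step=100):
    """Read "to the left" as one fixed step in the -x direction."""
    x, y = xy
    return (x - step, y)


# Ordinary control flow ties the pieces together: keep stepping left
# until the target crosses a (made-up) boundary at x = 100 mm.
path = [position]
while path[-1][0] > 100:
    path.append(to_the_left(path[-1]))

print(faster(speed))  # → 150.0
print(path)           # → [(300, 200), (200, 200), (100, 200)]
```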

For now, CaP is still very much limited in what it can do. It relies on the language model it is based on to provide context for its instructions. If the instructions don’t make sense or use parameters it doesn’t support, it can’t write code. Similarly, it apparently can only manage a handful of parameters in a single prompt; more complex sequences of actions that require dozens of parameters just aren’t possible. There are also safety concerns: Programming a robot to write its own code is a bit like Skynet. If it thinks the best way to achieve a task is to spin around really fast with its arm extended and there is a human nearby, somebody could get hurt.

Still, it’s incredibly exciting research. With robots, one of the hardest tasks is generalizing their trained behaviors. Programming a robot to play ping-pong doesn’t make it capable of playing other games like baseball or tennis. Although CaP is still miles away from such broad real-world applications, it does allow a robot to perform a wide range of complex tasks without task-specific training. That’s a big step in the direction of one day being able to teach a robot that can play one game how to play another, without having to break everything down into new human-written code.

The post Google is testing a new robot that can program itself appeared first on Popular Science.

This article was written by Harry Guinness from Popular Science and was legally licensed through the Industry Dive Content Marketplace. Please direct all licensing questions to legal@industrydive.com.